Hyperparameter Tuning¶

Determined provides state-of-the-art hyperparameter tuning through an intuitive interface. The machine learning engineer simply runs an experiment in which they:

Configure hyperparameter ranges to search.

Instrument model code to use hyperparameters from the experiment configuration.

Specify a searcher to find effective hyperparameter settings within the predefined ranges.

Configuring Hyperparameter Ranges¶

The first step toward automatic hyperparameter tuning is to define the hyperparameter space, e.g., by listing the decisions that may impact model performance. For each hyperparameter in the search space, the machine learning engineer specifies a range of possible values in the experiment configuration:

hyperparameters:

...

dropout_probability:

type: double

minval: 0.2

maxval: 0.5

...

Determined supports the following searchable hyperparameter data types:

int: an integer within a rangedouble: a floating point number within a rangelog: a logarithmically scaled floating point number—users specify abaseand Determined searches the space of exponents within a rangecategorical: a variable that can take on a value within a specified set of values—the values themselves can be of any type

The experiment configuration reference details these data types and their associated options.

Instrumenting Model Code¶

Determined injects hyperparameters from the experiment configuration

into model code via a context object in the Trial base class. This

TrialContext object exposes a

get_hparam() method that takes the

hyperparameter name. At trial runtime, Determined injects a value for

the hyperparameter. For example, to inject the value of the

dropout_probability hyperparameter defined above into the

constructor of a PyTorch Dropout layer:

nn.Dropout(p=self.context.get_hparam("dropout_probability"))

To see hyperparameter injection throughout a complete Trial implementation, refer to the PyTorch MNIST Tutorial.

Specifying the Search Algorithm¶

Determined supports a variety of hyperparameter search algorithms. Aside from the single searcher, a

searcher runs multiple trials and decides the hyperparameter values to

use in each trial. Every searcher is configured with the name of the

validation metric to optimize (via the metric field), in addition to

other searcher-specific options. For example, the (state-of-the-art) adaptive_asha searcher,

suitable for larger experiments with many trials, is configured with the

maximum number of trials to run, the maximum training length allowed per

trial, and the maximum number of trials that can be worked on

simultaneously:

searcher:

name: "adaptive_asha"

metric: "validation_loss"

max_trials: 16

max_length:

epochs: 1

max_concurrent_trials: 8

For details on the supported searchers and their respective configuration options, refer to Hyperparameter Tuning With Determined.

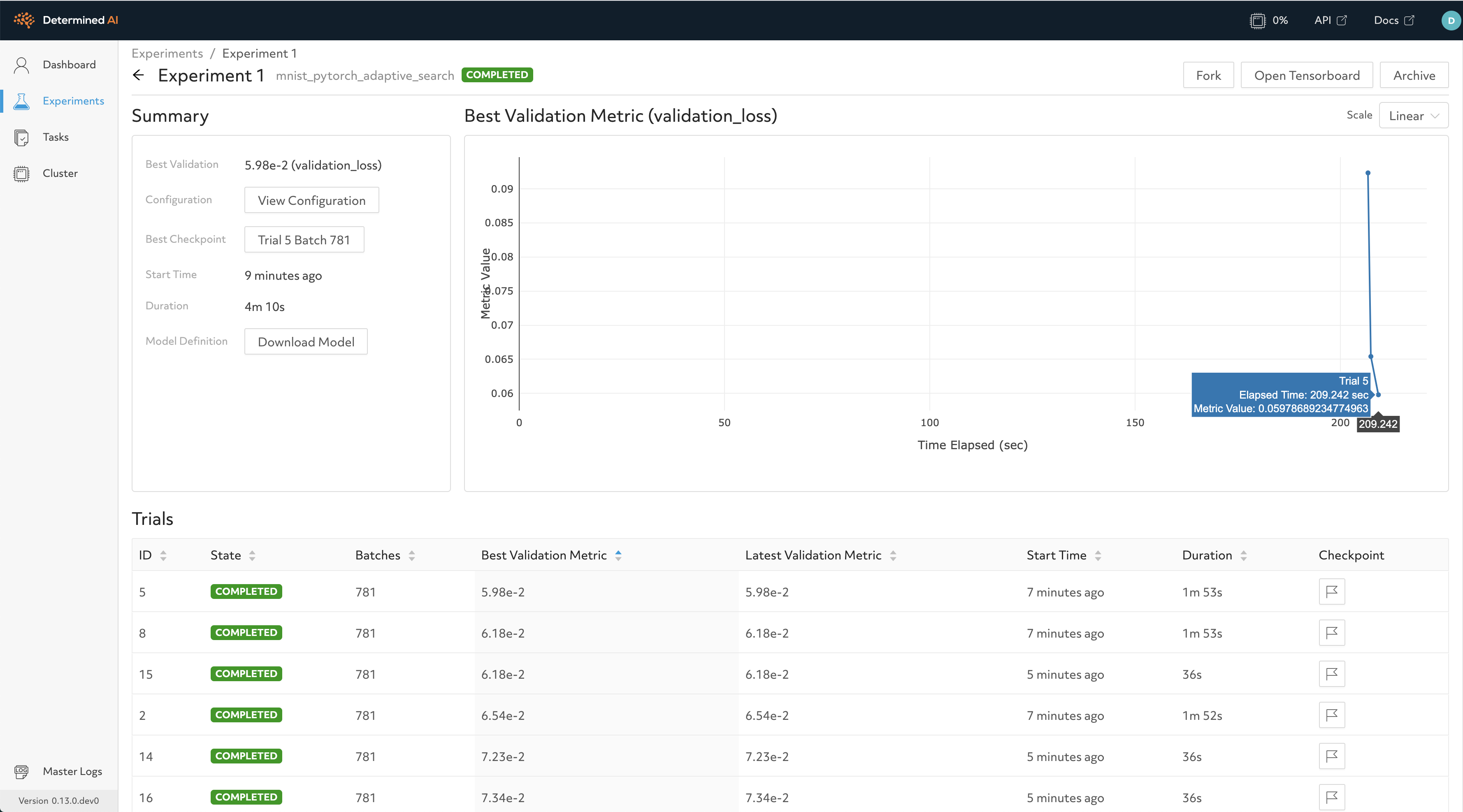

That’s it! After submitting an experiment, users can easily see the best validation metric observed across all trials over time in the WebUI. After the experiment has completed, they can view the hyperparameter values for the best-performing trials and then export the associated model checkpoints for downstream serving.