Native API (experimental)

The Native API allows developers to seamlessly move between training in a local development environment and training at cluster scale on a Determined cluster. It also provides an interface to train tf.keras and tf.estimator models using idiomatic framework patterns, reducing (or eliminating) the effort to port model code for use with Determined.

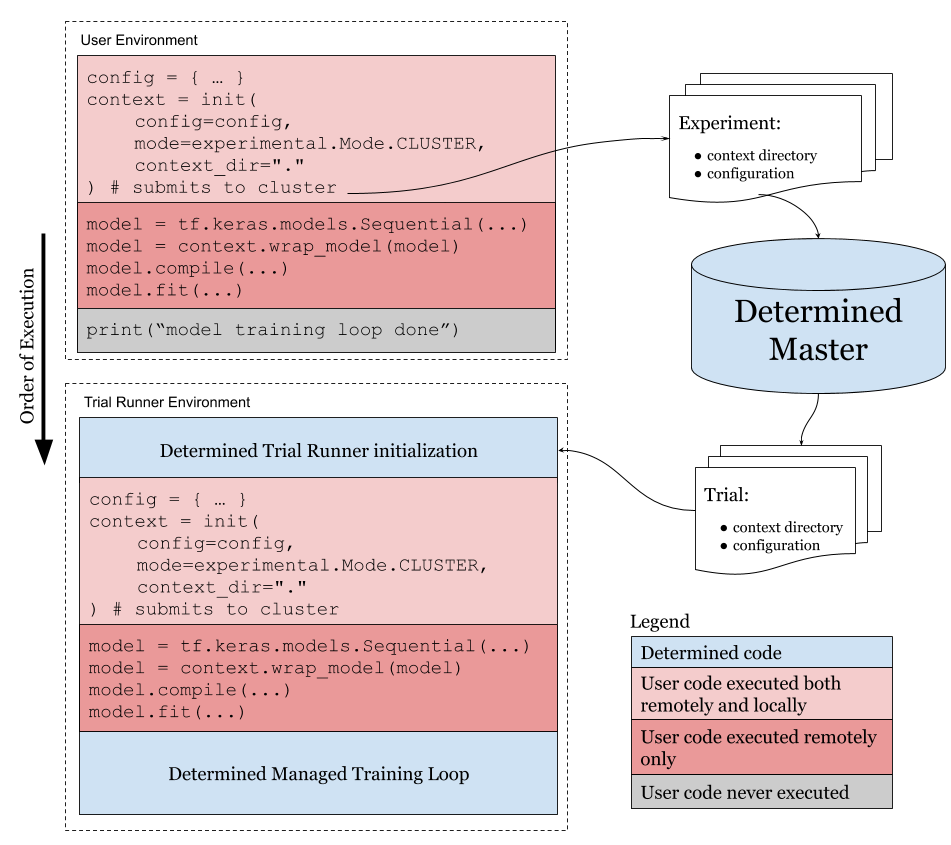

In this guide, we’ll cover what happens under the hood when a user submits an experiment to Determined using the Native API. This topic guide is meant as a deep dive for advanced users; for a tutorial on getting started with the Native API, see Native API Tutorial (Experimental).

Life of a Native API Experiment

The diagram above shows the flow of execution when an experiment is created in determined.experimental.Mode.CLUSTER mode.

The Python script is first executed in the User environment, such as a Python virtualenv or a Jupyter notebook where the determined Python package is installed. When the code runs, init() creates an experiment by submitting the contents of the context directory and the experiment configuration to the Determined master in a network request. Any code that comes after the init() call (typically the model definition and training loop) is not executed in the User environment. In the case of tf.keras, for example, the model is built and compiled only on the Determined cluster.
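The submission step can be sketched in plain Python. This is a minimal illustration under stated assumptions, not Determined's implementation. `init_user_env`, the payload layout, and the `submit` callback are all hypothetical; `submit` stands in for the network request to the master. The sketch shows the two behaviors the paragraph describes. First, the context directory and configuration are packaged and sent. Second, the script then stops, so nothing after the init() call runs locally.

```python
import io
import json
import pathlib
import tarfile
import tempfile
from typing import Callable, Tuple


def init_user_env(config: dict, context_dir: str, submit: Callable[[dict], None]) -> None:
    """Hypothetical stand-in for init() in the User environment."""
    # Package the context directory as an in-memory .tar.gz archive.
    buf = io.BytesIO()
    with tarfile.open(fileobj=buf, mode="w:gz") as tar:
        tar.add(context_dir, arcname=".")
    # Hypothetical payload layout; the real request format is not described here.
    payload = {"config": json.dumps(config), "context": buf.getvalue()}
    submit(payload)  # stands in for the network request to the Determined master
    # Stop the script: the model definition and training loop never run locally.
    raise SystemExit(0)


def demo() -> Tuple[dict, bool]:
    """Call init_user_env the way a user script would, with a throwaway context dir."""
    submitted = []
    reached_after_init = False
    with tempfile.TemporaryDirectory() as ctx:
        pathlib.Path(ctx, "model_def.py").write_text("# model code lives here\n")
        try:
            init_user_env({"hyperparameters": {"lr": 0.01}}, ctx, submitted.append)
            reached_after_init = True  # would be model building; never reached
        except SystemExit:
            pass
    return submitted[0], reached_after_init
```

Raising `SystemExit` is one simple way to model "execution stops here". The key point is that the archive and configuration are handed off before the exception fires.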

Once the Determined master has initialized a Trial Runner environment, the user script is re-executed from the beginning using runpy.run_path. In the Trial Runner environment, init() returns a determined.NativeContext object that holds information specific to that trial (e.g., hyperparameter choices), and the script continues executing. When the script reaches the training loop function (in this case, tf.keras.Model.fit), Determined takes over and runs the managed training loop.
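The re-execution step can be illustrated with the standard library's runpy.run_path, which the paragraph names. In this sketch the same user script runs twice. The first run uses an init() that stops, mimicking the User environment. The second uses an init() that returns a context, mimicking the Trial Runner environment. `make_init`, `FakeNativeContext`, and its `get_hparam` method are hypothetical stand-ins injected through `init_globals`; a real script would import init() from the determined package instead.

```python
import os
import runpy
import tempfile
import textwrap
from dataclasses import dataclass, field
from typing import Callable, Optional


@dataclass
class FakeNativeContext:
    """Hypothetical stand-in for the determined.NativeContext a trial receives."""
    hparams: dict = field(default_factory=dict)

    def get_hparam(self, name: str):
        return self.hparams[name]


def make_init(in_trial_runner: bool, hparams: dict) -> Callable[[], FakeNativeContext]:
    """Build a hypothetical init() whose behavior depends on the environment."""
    def init() -> FakeNativeContext:
        if not in_trial_runner:
            # User environment: the experiment would be submitted here, then the script stops.
            raise SystemExit(0)
        # Trial Runner environment: hand back trial-specific information and keep going.
        return FakeNativeContext(hparams)
    return init


# The user script; `init` is injected via init_globals rather than imported.
USER_SCRIPT = textwrap.dedent("""
    context = init()
    learning_rate = context.get_hparam("learning_rate")
""")


def run_script(path: str, in_trial_runner: bool, hparams: dict) -> Optional[dict]:
    """Execute the script from the beginning; return its globals, or None if it stopped."""
    try:
        return runpy.run_path(path, init_globals={"init": make_init(in_trial_runner, hparams)})
    except SystemExit:
        return None


def demo():
    with tempfile.TemporaryDirectory() as tmp:
        path = os.path.join(tmp, "train.py")
        with open(path, "w") as f:
            f.write(USER_SCRIPT)
        user_env = run_script(path, in_trial_runner=False, hparams={})
        runner_env = run_script(path, in_trial_runner=True, hparams={"learning_rate": 0.01})
    return user_env, runner_env
```

runpy.run_path returns the executed module's globals, which makes it easy to see that only the Trial Runner run got past init().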

Warning

Any user code that occurs after the training loop has started will never be executed!
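The warning follows from how interception works. The sketch below is a hypothetical illustration, not Determined's mechanism; `Model`, `intercept_fit`, and the `managed_loop` callback are invented names. Patching fit() hands control to a managed loop, and the process then exits, so the line after `model.fit(...)` is never reached.

```python
from typing import Callable, List


class Model:
    """Hypothetical model class exposing a high-level fit() training loop."""

    def fit(self, epochs: int = 1) -> None:
        raise RuntimeError("the local training loop should have been intercepted")


def intercept_fit(model_cls: type, managed_loop: Callable[[object, dict], None]) -> None:
    """Replace model_cls.fit so that calling it runs a managed loop and then stops."""
    def managed_fit(self, *args, **kwargs):
        managed_loop(self, kwargs)  # training is driven by the managed loop
        raise SystemExit(0)  # control never returns to the user script
    model_cls.fit = managed_fit


def user_script(log: List[str]) -> None:
    log.append("build model")
    model = Model()
    model.fit(epochs=3)
    log.append("after fit")  # never executed once fit() is intercepted


def demo() -> List[str]:
    log: List[str] = []
    intercept_fit(Model, lambda model, kwargs: log.append(f"managed loop: epochs={kwargs['epochs']}"))
    try:
        user_script(log)
    except SystemExit:
        pass
    return log
```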

High-Level Training Loop Support

To execute a custom Python script, Determined requires that the user script contain a high-level training loop that it can intercept. Currently, the following training loop functions are supported:

If you are a PyTorch user, there is no standardized high-level training loop to hook into. However, it is still possible to submit experiments from Python by using the Trial API, as described in Integration with the Trial API.

Integration with the Trial API

The Native API can also be used to submit experiments that use the determined.pytorch.PyTorchTrial, determined.keras.TFKerasTrial, or determined.estimator.EstimatorTrial APIs. To do so, write a script that passes your Trial class definition and the experiment configuration to determined.experimental.create(), and execute it in determined.experimental.Mode.CLUSTER mode.

determined.experimental.create() replaces init() in the lifecycle diagram above. Like init(), create() submits the experiment to the Determined master via a network request and then stops execution in the User environment. Unlike init(), however, create() does not return a context object, because the Trial class and configuration passed to it already encapsulate all the information the Determined training loop needs. Python code that comes after create() is executed neither in the User environment nor in the Trial Runner environment.
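The create() lifecycle can be sketched the same way. This sketch assumes that, as with init(), the script is re-executed in the Trial Runner environment. Everything here is hypothetical. `make_create`, `MyTrial`, and its `train_step` method stand in for determined.experimental.create() and a real Trial class, whose actual signatures are not shown in this guide. In the User environment the sketch submits and stops. In the Trial Runner environment it builds the trial from the class it was given and drives the loop itself, never returning a context. In both cases the line after create() is never reached.

```python
from typing import Callable


class MyTrial:
    """Hypothetical Trial class; a real one would subclass e.g. PyTorchTrial."""

    def __init__(self, context: dict):
        self.lr = context["hparams"]["lr"]

    def train_step(self, step: int) -> dict:
        return {"step": step, "lr": self.lr}


def make_create(in_trial_runner: bool, hparams: dict, log: list) -> Callable[[type, dict], None]:
    """Build a hypothetical create() whose behavior depends on the environment."""
    def create(trial_def: type, config: dict) -> None:
        if not in_trial_runner:
            log.append("submitted")  # stands in for the network request to the master
            raise SystemExit(0)
        # Trial Runner: the runner supplies this trial's hyperparameters; together with
        # the Trial class they are all the loop needs, so nothing is returned to the script.
        trial = trial_def({"hparams": hparams, "config": config})
        for step in range(2):
            log.append(trial.train_step(step))
        raise SystemExit(0)
    return create


def user_script(create: Callable[[type, dict], None], log: list) -> None:
    log.append("before create")
    create(MyTrial, {"hyperparameters": {"lr": 0.01}})
    log.append("after create")  # never executed in either environment


def run(in_trial_runner: bool) -> list:
    log: list = []
    try:
        user_script(make_create(in_trial_runner, {"lr": 0.01}, log), log)
    except SystemExit:
        pass
    return log
```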